Shipping Events from Fluentd to Elasticsearch

We use fluentd to process and route log events from our various applications. It’s simple, safe, and flexible. With at-least-once delivery by default, log events are buffered at every step before they’re sent off to the various storage backends. However, there are some caveats with using Elasticsearch as a backend.

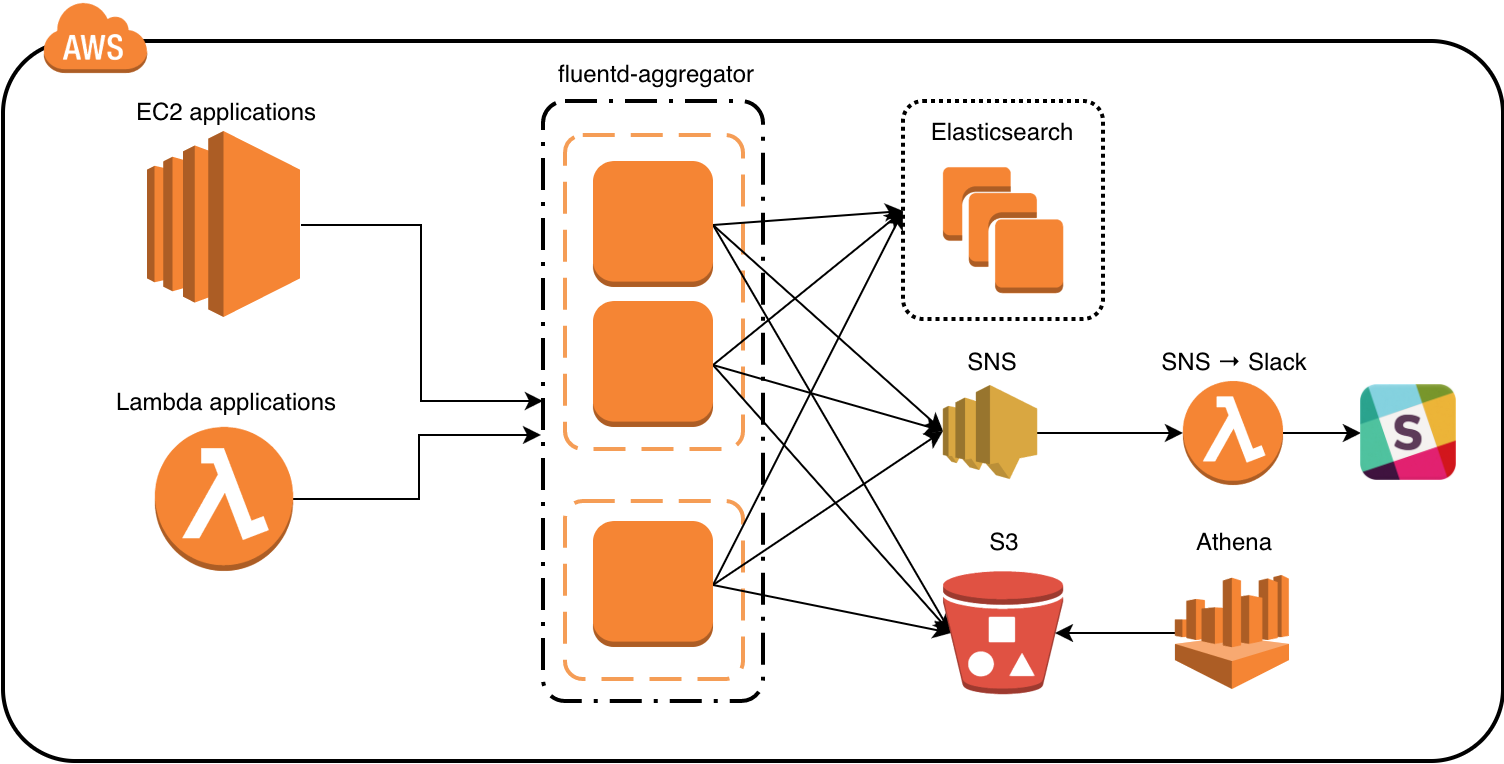

Currently, our setup looks something like this:

The general flow of data is from the application, to the fluentd aggregators, then to the backends – mainly Elasticsearch and S3. If a log event warrants a notification, it’s published to an SNS topic, which in turn triggers a Lambda function that sends the notification to Slack.
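To make the notification leg concrete, here is a minimal sketch of what such a Lambda function could look like. It is not our actual function: the `SLACK_WEBHOOK_URL` environment variable, the function names, and the message layout are all assumptions made for the example. Only the event shape (`Records[].Sns.Subject` and `Records[].Sns.Message`) is the standard one Lambda receives from SNS.

```python
import json
import os
import urllib.request


def slack_payloads(event):
    """Turn an SNS-triggered Lambda event into Slack webhook payloads."""
    payloads = []
    for record in event.get("Records", []):
        sns = record["Sns"]
        # Subject is optional on SNS messages, so fall back to a generic title.
        subject = sns.get("Subject") or "Log notification"
        payloads.append({"text": f"*{subject}*\n{sns['Message']}"})
    return payloads


def handler(event, context):
    """Lambda entry point: post one Slack message per SNS record."""
    # SLACK_WEBHOOK_URL is a hypothetical environment variable for this sketch.
    url = os.environ["SLACK_WEBHOOK_URL"]
    for payload in slack_payloads(event):
        request = urllib.request.Request(
            url,
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"},
        )
        urllib.request.urlopen(request, timeout=5)
```

Keeping the payload construction in its own function means it can be tested without a network connection or a real webhook.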

The fluentd aggregators are managed by an auto-scaling group, but they do not sit behind a load balancer. Instead, a Lambda function subscribed to the auto-scaling group's lifecycle notifications updates a DNS round-robin entry with the private IP addresses of the fluentd aggregator instances.
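The core of that DNS update can be sketched as follows. This assumes the record lives in Route 53, which the setup above does not actually state; the function name, the record name, and the 60-second TTL are also illustrative. The function only builds the change batch, so the set of addresses that ends up in DNS is easy to inspect.

```python
def round_robin_change_batch(record_name, private_ips, ttl=60):
    """Build a Route 53 change batch with one A record value per aggregator."""
    if not private_ips:
        # Publishing an empty record set would leave clients with nowhere to go.
        raise ValueError("refusing to publish an empty record set")
    return {
        "Comment": "fluentd aggregator round-robin",
        "Changes": [
            {
                # UPSERT creates the record set or replaces the existing one.
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "A",
                    "TTL": ttl,
                    # De-duplicate and sort so repeated runs produce the same batch.
                    "ResourceRecords": [
                        {"Value": ip} for ip in sorted(set(private_ips))
                    ],
                },
            }
        ],
    }
```

With boto3, the result would be passed as the `ChangeBatch` argument of the Route 53 client's `change_resource_record_sets` call, along with the `HostedZoneId` of the zone. A short TTL keeps clients from holding on to terminated instances for long.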

We use the fluent-plugin-elasticsearch plugin to output log events to Elasticsearch. However, because this plugin uses the bulk insert API and does not validate whether each event has actually been inserted into the cluster, it is dangerous to rely on it exclusively (hence the S3 backup).
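The reason this matters is that a bulk request can succeed as a whole while individual items in it are rejected: the response carries a top-level `errors` flag and a per-item `status`. The sketch below shows what a per-item check might look like. It illustrates the bulk response format only and is not code from the plugin; the function name is made up for the example.

```python
def failed_bulk_items(response):
    """Return (position, action, status, error) for each rejected bulk item."""
    # The top-level flag is true if at least one item failed.
    if not response.get("errors"):
        return []
    failures = []
    for position, item in enumerate(response.get("items", [])):
        # Each item has a single key naming the action: index, create, update, or delete.
        action, result = next(iter(item.items()))
        status = result.get("status")
        if "error" in result or (status is not None and status >= 300):
            failures.append((position, action, status, result.get("error")))
    return failures
```

Because items in the response come back in the same order as the actions in the request, the returned positions can be used to pick out the events that need to be retried or sent elsewhere.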