Edit (2016/4/29): I have written a follow-up piece to this blog post.

As many of you probably know, I am a professional programmer. I started my professional career with WordPress and PHP development, and now I find myself doing a lot of Ruby work. I am still in the very early stages of my professional career — I have only been doing this for about 5 years. There are people who are much more experienced than I am, and there is a whole world of things that I have yet to learn and experience.

Until recently, I’ve been relatively reluctant to change. I just wanted to get something done, using the tools I’m most familiar with. In the first few years of my career, that was PHP. Since learning and getting used to Ruby and Rails, they’ve been my go-to language and framework.

However, I’ve decided to throw caution to the wind and choose a new language to use going forward: Elixir.

Every programming language has something that irks somebody. The thing that irks me about Ruby is its lack of a really robust concurrency model. There have been several experiments: EventMachine (evented I/O, like Node), Rubinius and JRuby (real threads), and Unicorn (forking!). But none of these solutions is particularly elegant. Relying on forking for concurrency, even with copy-on-write, is not efficient at all. (Read more about concurrency and Ruby: Matz is not a threading guy.)

Enter Elixir. Elixir is a language that runs on top of the Erlang VM. The Erlang environment is something special, I think. It’s built from the ground up with concurrency and reliability in mind (it was developed for use in telecom systems). Originally a proprietary language at Ericsson for a little over 10 years, it was open-sourced in 1998. Elixir builds on this very mature ecosystem, and makes it accessible enough for “mere mortals” to build high-performance applications.

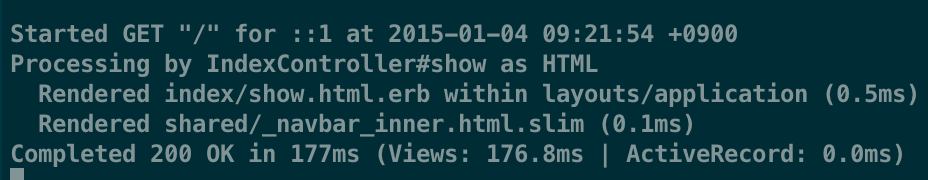

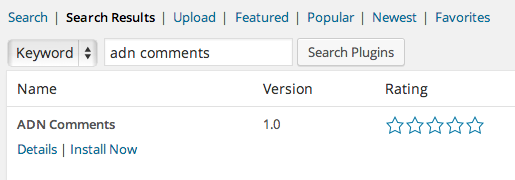

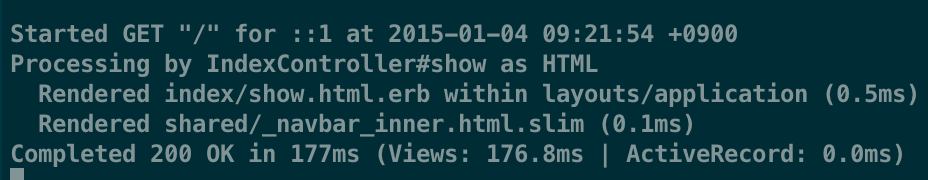

When I first tried Elixir out to build a web app, like you would with Rails, I was pretty surprised. If you’re used to Rails, you’ve seen this log message:

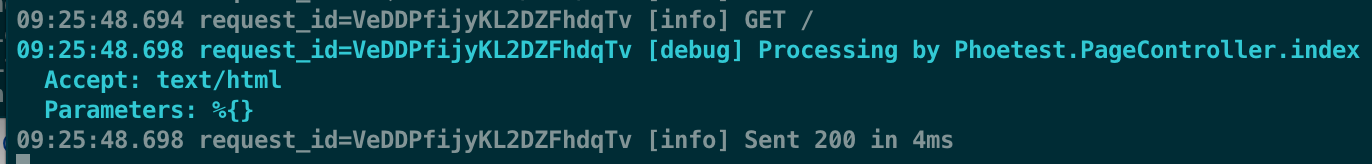

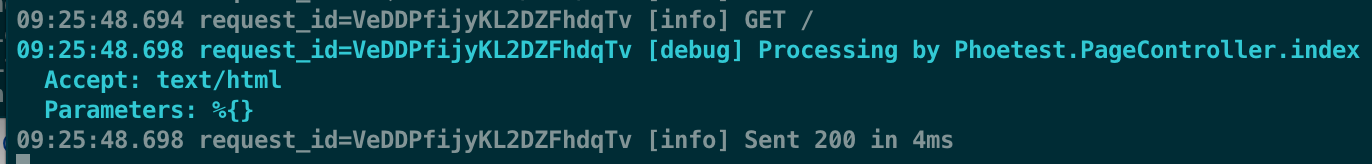

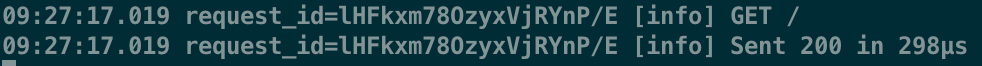

This is the Elixir / Phoenix framework equivalent:

170ms down to 5ms. And that’s in development mode. Switch it to production mode, and this is what you see:

5ms down to 300 microseconds.

Granted, this is a very simple page. It’s basically a “Hello World” benchmark, but it certainly piqued my interest. This level of performance, coupled with the ability to maintain it even under immense load, is one of the factors going into my choice of Elixir.

The other factor is what I believe to be the direction of the Internet. We’re moving away from servers rendering static HTML and sending that over the wire, and toward more dynamic content powered by client-side JavaScript or native code on mobile devices. Elixir and Erlang handle this beautifully, dutifully keeping thousands and thousands of open WebSocket sessions alive without breaking a sweat. You should take a look at how WhatsApp uses one machine running their chat backend on Erlang to service more than 2 million simultaneous connections.

By investing my time now in Elixir and Erlang, I believe that I’m essentially getting ready for the next 20 years of the Internet. And I’m excited.